Introduction

I am sure most of you are familiar with set-based X++ CUD operators: insert_recordset, update_recordset and delete_from. They allow performing database operations with a large number of records in a single roundtrip to the server, instead of a row-by-row type of operation, which depends on the number of rows being processed. As a result, they can provide a very significant boost in overall performance of a selected flow.If not familiar or just need a refresher, you can read more about it by following the link to MSDN.

Problem statement

There's however one significant drawback with these operators - they are all compile-time constructs, so they lack the flexibility of the flow modifying the database request before it is set based on runtime state of the flow.And, once the application is sealed, that will mean there is no way to modify the request even at compile-time, as X++ statements are not extensible.

Solution

In Microsoft Dynamics AX 2012 R3 a static method was added on the Query class, that allows to solve the two problems above for insert_recordset. This is of course now also available in Microsoft Dynamics 365 for Finance and Operations: Enterprise edition.Note: update_recordset and delete_from are still not supported through the Query class.

Example

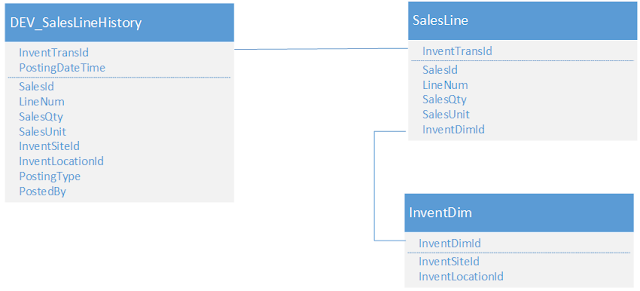

Imagine that we need to write a function that would copy selected sales order line information into a sales order line history table for one or more orders.1. Data model

Here's how the data model for this table is defined in this example: |

| DEV_SalesLineHistory data model diagram |

2. New DEV_SalesLineHistory table in Visual Studio

And here's how the new table looks in Visual Studio (I created a new model for it in a separate package dependent on Application Suite): |

| DEV_SalesLineHistory table |

3. Copy function through regular X++ insert_recordset statement

Let's first write the statement for inserting the history records using the regular insert_recordset operator:

public class DEV_Tutorial_InsertRecordset

{

public static Counter insertXppInsert_Recordset(SalesId _salesId)

{

DEV_SalesLineHistory salesLineHistory;

SalesLine salesLine;

InventDim inventDim;

JournalPostedDateTime postedDateTime = DateTimeUtil::utcNow();

JournalPostedUserId postedBy = curUserId();

SalesDocumentStatus postingType = DocumentStatus::PackingSlip;

insert_recordset salesLineHistory

(

SalesId,

LineNum,

InventTransId,

SalesQty,

SalesUnit,

InventSiteId,

InventLocationId,

PostedDateTime,

PostedBy,

PostingType

)

select SalesId, LineNum, InventTransId, SalesQty, SalesUnit from salesLine

where salesLine.SalesId == _salesId

join InventSiteId, InventLocationId, postedDateTime, postedBy, postingType from inventDim

where inventDim.InventDimId == salesLine.InventDimId;

return any2int(salesLineHistory.RowCount());

}

}As you can see, we do a simple select from SalesLine, specifying the exact fields, joined to selected fields from InventDim, where the field list also contains a few local variables to populate into the rows being inserted.

This is the standard syntax used with X++ insert_recordset statement, which all of you are familiar with.

4. Method signature for Query::insert_recordset()

Now let's convert the above to a Query, and call Query::insert_recordset() instead.

This method accepts three arguments:

As you can see from the above, it does not account for literals, as we did with variables in the X++ operator example.

That is currently not supported with this API.

We can however solve this through a use of a "temporary" table, as suggested below.

This method accepts three arguments:

- An instance of a table record. This is where data will be inserted into. We can then use this variable to ask how many rows were inserted, for example.

- An instance of a Map(Types::String, Types::Container), which defines the mapping of the fields to copy. In X++ operator, this had to be based on the specific order in the field selection lists in the select statement.

- The map key is the target field name.

- The value is a container, which defines a pair of values:

- the unique identifier of the QueryBuildDataSource object points to the table to copy the value from

- the field name on the above data source to copy the value from

- An instance of a Query class, which defines the select statement for the data, similar to what you see in the X++ version above.

As you can see from the above, it does not account for literals, as we did with variables in the X++ operator example.

That is currently not supported with this API.

We can however solve this through a use of a "temporary" table, as suggested below.

5. Define a new table to store calculable literals

Let us define a new table that will store the data required by our insert statement. That means it needs to contain four fields:

- PostedDateTime

- PostedBy

- PostingType

- SalesId - we'll use this to join to SalesLine. This could be sessionId or whatever is required to ensure concurrency and uniqueness

Here's how the table would look in Visual Studio designer:

|

| DEV_SalesLineHistoryPostingDataTmp table definition |

We can now populate this table with the required values and join it to our query.

After executing the bulk insert we can then delete the inserted row (if necessary).

Another possible implementation here could be to use a View, with computed columns for the different literal values needed. You could select from a table that has only 1 row, like InventParameters or the like. This is however less flexible, as it'll be compiled in, while with a "temporary" table you could determine the values at runtime.

6. Write up the code using the Query::insert_recordset() method

Now we are all set to write the necessary code. It would look like below:

public class DEV_Tutorial_InsertRecordset

{

public static Counter insertQueryInsert_Recordset(SalesId _salesId)

{

DEV_SalesLineHistory salesLineHistory;

Query query = new Query();

QueryBuildDataSource qbdsSalesLine = query.addDataSource(tableNum(SalesLine));

qbdsSalesLine.addSelectionField(fieldNum(SalesLine, SalesId));

qbdsSalesLine.addSelectionField(fieldNum(SalesLine, LineNum));

qbdsSalesLine.addSelectionField(fieldNum(SalesLine, InventTransId));

qbdsSalesLine.addSelectionField(fieldNum(SalesLine, SalesQty));

qbdsSalesLine.addSelectionField(fieldNum(SalesLine, SalesUnit));

qbdsSalesLine.addRange(fieldNum(SalesLine, SalesId)).value(queryValue(_salesId));

QueryBuildDataSource qbdsInventDim = qbdsSalesLine.addDataSource(tableNum(InventDim));

qbdsInventDim.addSelectionField(fieldNum(InventDim, InventLocationId));

qbdsInventDim.addSelectionField(fieldNum(InventDim, InventSiteId));

qbdsInventDim.relations(true);

QueryBuildDataSource qbdsPostingData = qbdsInventDim.addDataSource(tableNum(DEV_SalesLineHistoryPostingDataTmp));

qbdsPostingData.addLink(fieldNum(SalesLine, SalesId), fieldNum(DEV_SalesLineHistoryPostingDataTmp, SalesId), qbdsSalesLine.name());

qbdsPostingData.addSelectionField(fieldNum(DEV_SalesLineHistoryPostingDataTmp, PostedDateTime));

qbdsPostingData.addSelectionField(fieldNum(DEV_SalesLineHistoryPostingDataTmp, PostedBy));

qbdsPostingData.addSelectionField(fieldNum(DEV_SalesLineHistoryPostingDataTmp, PostingType));

Map targetToSourceMap = new Map(Types::String, Types::Container);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, SalesId), [qbdsSalesLine.uniqueId(), fieldStr(SalesLine, SalesId)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, LineNum), [qbdsSalesLine.uniqueId(), fieldStr(SalesLine, LineNum)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, InventTransId), [qbdsSalesLine.uniqueId(), fieldStr(SalesLine, InventTransId)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, SalesQty), [qbdsSalesLine.uniqueId(), fieldStr(SalesLine, SalesQty)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, SalesUnit), [qbdsSalesLine.uniqueId(), fieldStr(SalesLine, SalesUnit)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, InventLocationId), [qbdsInventDim.uniqueId(), fieldStr(InventDim, InventLocationId)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, InventSiteId), [qbdsInventDim.uniqueId(), fieldStr(InventDim, InventSiteId)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, PostedDateTime), [qbdsPostingData.uniqueId(), fieldStr(DEV_SalesLineHistoryPostingDataTmp, PostedDateTime)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, PostedBy), [qbdsPostingData.uniqueId(), fieldStr(DEV_SalesLineHistoryPostingDataTmp, PostedBy)]);

targetToSourceMap.insert(fieldStr(DEV_SalesLineHistory, PostingType), [qbdsPostingData.uniqueId(), fieldStr(DEV_SalesLineHistoryPostingDataTmp, PostingType)]);

ttsbegin;

DEV_SalesLineHistoryPostingDataTmp postingData;

postingData.PostedDateTime = DateTimeUtil::utcNow();

postingData.PostedBy = curUserId();

postingData.PostingType = DocumentStatus::Invoice;

postingData.SalesId = _salesId;

postingData.insert();

Query::insert_recordset(salesLineHistory, targetToSourceMap, query);

delete_from postingData

where postingData.SalesId == _salesId;

ttscommit;

return any2int(salesLineHistory.RowCount());

}

}

As you can see, the first part of the code builds a query using the QueryBuild* class hierarchy. The query is identical to the above select statement, with the addition of another join to our "tmp" table to retrieve the literals.

The second part populates the target to source Map object, which maps the fields to insert into to their source.

The third part actually invokes the operation, making sure we have the record populated in our "tmp" table beforehand.

Note. Because the first argument is the record we insert into, we can use it to get the RowCount(), telling us how many records have been inserted.

7. Extensibility aspect

Leaving the code just like it is does not actually make it extensible, as the partners would not be able to add additional fields to copy, or additional conditions/joins to the query. To accomplish this, we'd need to break the logic out into smaller methods with specific responsibilities, or add inline delegates to do the same. Generally speaking, you should always favor breaking the code down into simpler methods over delegates.

I've not done this in the example, as that's not the purpose, but you should follow these guidelines in production code.

8. Execute and test the code

We can execute these methods now, but first we need to ensure we have a test subject, aka a Sales order to practice with. Here's the one I used in the example:

|

| A sales order with 3 order lines |

And here's the Runnable Class that invokes the two methods above:

class DEV_Tutorial_InsertRecordset_Runner

{

public static void main(Args _args)

{

const SalesId SalesId = '000810';

if (SalesTable::exist(SalesId))

{

Counter xppInsert = DEV_Tutorial_InsertRecordset::insertXppInsert_Recordset(SalesId);

Counter queryInsert = DEV_Tutorial_InsertRecordset::insertQueryInsert_Recordset(SalesId);

strFmt('Tutorial Insert_Recordset');

info(strFmt('Inserted using X++ insert_recordset = %1', xppInsert));

info(strFmt('Inserted using Query::insert_recordset() = %1', queryInsert));

}

}

}